Base_model: deepseek-ai/deepseek-coder-1.3b-instruct

Training_data: javadoc_v0.3.json (363 MB Javadoc dataset)

    DatasetDict({
        train: Dataset({
            features: ['output', 'input', 'instruction'],
            num_rows: 331686
        })
        test: Dataset({
            features: ['output', 'input', 'instruction'],
            num_rows: 17457
        })
    })
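The three features suggest an instruction-tuning layout. The prompt template used for training is not stated here, so the following is only a minimal sketch assuming an Alpaca-style template; `build_prompt` and the sample record are hypothetical and not taken from the dataset.

```python
def build_prompt(example: dict) -> str:
    """Format one record as an Alpaca-style prompt (assumed template)."""
    instruction = example["instruction"].strip()
    context = example.get("input", "").strip()
    if context:
        return (
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{context}\n\n"
            "### Response:\n"
        )
    # Records without an input field skip the "Input" section entirely.
    return f"### Instruction:\n{instruction}\n\n### Response:\n"


# Hypothetical record for illustration only; real rows are not shown in this card.
sample = {
    "instruction": "Write a Javadoc comment for the following method.",
    "input": "public int add(int a, int b) { return a + b; }",
    "output": "/** Returns the sum of a and b. */",
}
prompt = build_prompt(sample)
print(prompt)
```

During training the `output` field would be appended after `### Response:` as the target text.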

LoRA fine-tuning

LORA_R = 8

LORA_ALPHA = 16

LORA_DROPOUT = 0.05

LORA_TARGET_MODULES = ["q_proj", "v_proj", "k_proj", "o_proj"]
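For reference, the LoRA values above could be passed to the PEFT library roughly as follows. This is a sketch, not the exact training script: `bias` and `task_type` are assumptions, since they are not listed here.

```python
from peft import LoraConfig

lora_config = LoraConfig(
    r=8,                    # LORA_R
    lora_alpha=16,          # LORA_ALPHA
    lora_dropout=0.05,      # LORA_DROPOUT
    target_modules=["q_proj", "v_proj", "k_proj", "o_proj"],
    bias="none",            # assumption: not stated in this card
    task_type="CAUSAL_LM",  # assumption: causal language modelling
)
```

With `r=8` and `lora_alpha=16`, the adapter update is scaled by `lora_alpha / r = 2`.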

WARMUP_STEPS = 100

OPTIM = "adamw_torch"

BATCH_SIZE = 128

MICRO_BATCH_SIZE = 8

GRADIENT_ACCUMULATION_STEPS = BATCH_SIZE // MICRO_BATCH_SIZE

LEARNING_RATE = 2e-4
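The batch settings above work out as follows. This sketch assumes training on a single device; with several devices the per-step batch would also be multiplied by the device count.

```python
BATCH_SIZE = 128       # samples per optimizer step
MICRO_BATCH_SIZE = 8   # samples per forward/backward pass
GRADIENT_ACCUMULATION_STEPS = BATCH_SIZE // MICRO_BATCH_SIZE

# The micro-batches must add up to the full batch exactly.
assert GRADIENT_ACCUMULATION_STEPS * MICRO_BATCH_SIZE == BATCH_SIZE

print(GRADIENT_ACCUMULATION_STEPS)  # 16
```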

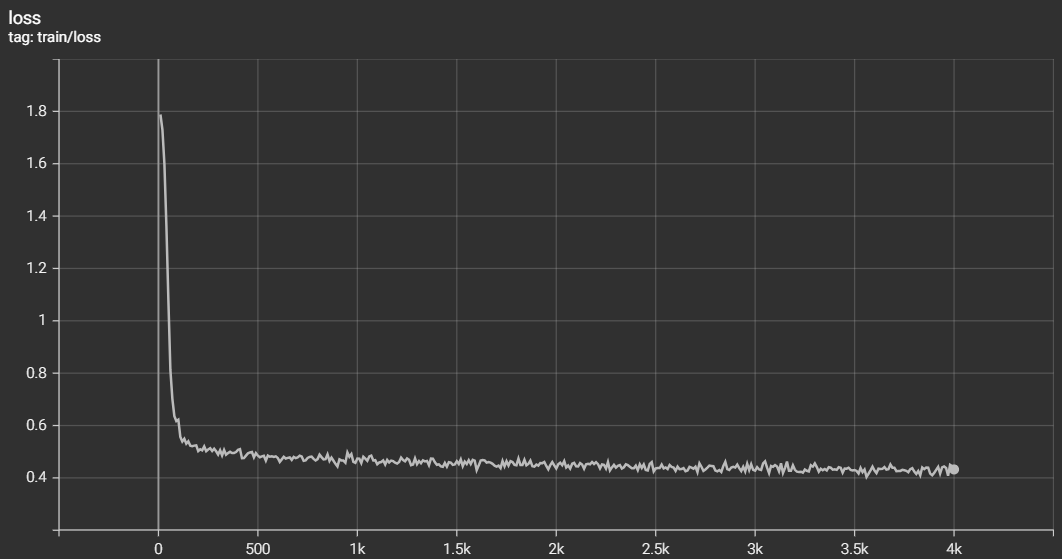

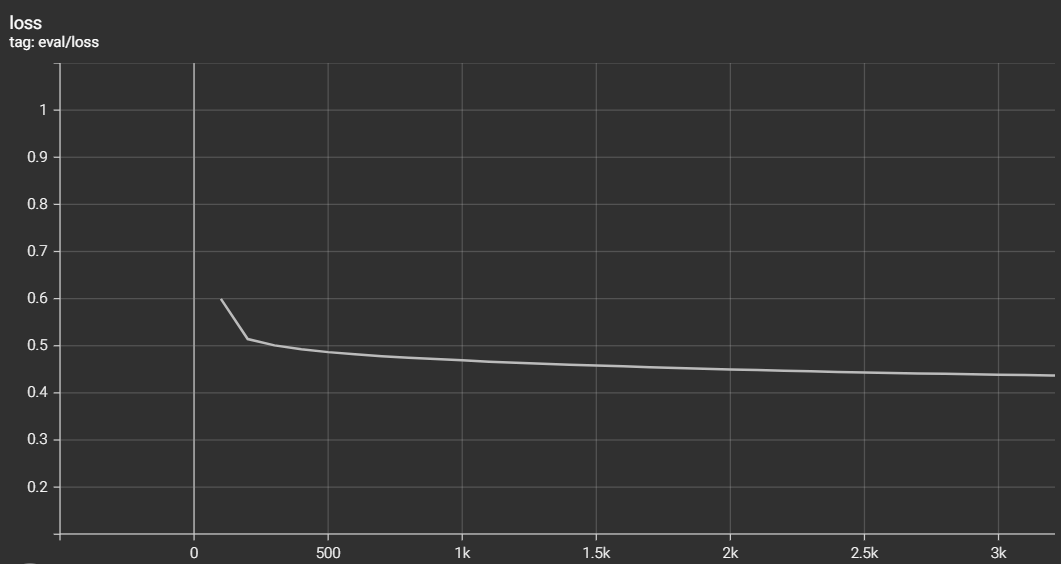

TRAIN_STEPS = 4000

trainable params: 3,145,728

all params: 1,349,617,664

trainable%: 0.2330829007288393
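The trainable parameter count can be reproduced by hand. The sketch below assumes a hidden size of 2048, 24 transformer layers, and square attention projections for the base model; these values are not stated in this card, but they are consistent with the reported count.

```python
HIDDEN_SIZE = 2048  # assumption: hidden size of the base model
NUM_LAYERS = 24     # assumption: number of transformer layers
LORA_R = 8
TARGET_MODULES = ["q_proj", "v_proj", "k_proj", "o_proj"]

# Each adapted d x d projection gets two low-rank matrices:
# A with shape (r, d) and B with shape (d, r).
params_per_module = LORA_R * (HIDDEN_SIZE + HIDDEN_SIZE)
trainable = params_per_module * len(TARGET_MODULES) * NUM_LAYERS

all_params = 1_349_617_664  # reported total, including the adapters
trainable_pct = 100 * trainable / all_params

print(trainable)      # 3145728
print(trainable_pct)
```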

{'train_runtime': 599660.8522, 'train_samples_per_second': 0.854, 'train_steps_per_second': 0.007, 'train_loss': 0.4703766193389893, 'epoch': 1.54}
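The reported statistics are consistent with the settings above, as a quick check shows. This sketch only recomputes the numbers from the step count, batch size, and training set size already listed.

```python
TRAIN_STEPS = 4000
BATCH_SIZE = 128
TRAIN_ROWS = 331686
TRAIN_RUNTIME_S = 599660.8522  # 'train_runtime' from the log

samples_seen = TRAIN_STEPS * BATCH_SIZE          # 512000
epochs = samples_seen / TRAIN_ROWS               # passes over the train split
samples_per_second = samples_seen / TRAIN_RUNTIME_S
steps_per_second = TRAIN_STEPS / TRAIN_RUNTIME_S
runtime_days = TRAIN_RUNTIME_S / 86400           # seconds per day

print(round(epochs, 2))              # 1.54
print(round(samples_per_second, 3))  # 0.854
print(round(steps_per_second, 3))    # 0.007
print(round(runtime_days, 2))        # 6.94
```

The run therefore took just under seven days of wall-clock time.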